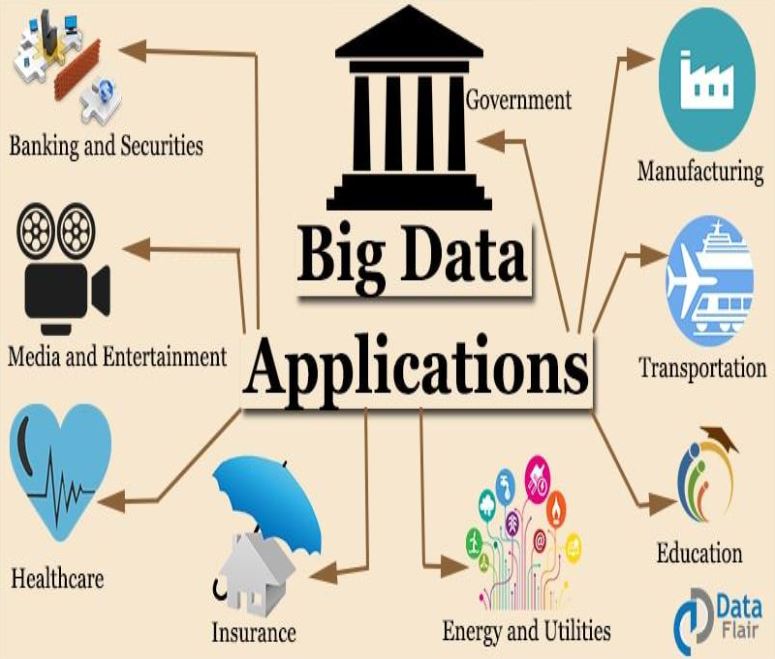

What is Big Data?

In very simple terms, Big data is data that exceeds the processing capacity of traditional databases. The data is too big to be processed by a single machine. New and innovative methods are required to process and store such large volumes of data.

Big data is a blanket term for the non-traditional strategies and technologies needed to gather, organize, process, and gather insights from large datasets. It is a combination of structured, semistructured and unstructured data collected by organizations that can be mined for information and used in machine learning projects, predictive modeling and other advanced analytics applications.

Why Every Organization May Need Big Data Strategy?

Despite the hype, many organizations don't realize they have a big data problem or they simply don't think of it in terms of big data. In general, an organization is likely to benefit from big data technologies when existing databases and applications can no longer scale to support sudden increases in volume, variety, and velocity of data.

Failure to correctly address big data challenges can result in escalating costs, as well as reduced productivity and competitiveness. On the other hand, a sound big data strategy can help organizations reduce costs and gain operational efficiencies by migrating heavy existing workloads to big data technologies; as well as deploying new applications to capitalize on new opportunities.

Characteristics of Big Data

In 2001, Gartner's Doug Laney first presented what became known as the 'three Vs of big data' to describe some of the characteristics that make big data different from other data processing:

Volume Ranges from terabytes to petabytes of data. Often, because the work requirements exceed the capabilities of a single computer, this becomes a challenge of pooling, allocating, and coordinating resources from groups of computers.

Velocity Increasingly, businesses have stringent requirements from the time data is generated, to the time actionable insights are delivered to the users. Data is constantly being added, massaged, processed, and analyzed in order to keep up with the influx of new information and to surface valuable information early when it is most relevant. Therefore, data needs to be collected, stored, processed, and analyzed within relatively short windows - ranging from daily to real-time

Variety Data can be ingested from internal systems like application and server logs, from social media feeds and other external APIs, from physical device sensors, and from other providers. Big data seeks to handle potentially useful data regardless of where it's coming from by consolidating all information into a single system.

Veracity It refers to the degree of accuracy in data sets and how trustworthy they are. Raw data collected from various sources can cause data quality issues that may be difficult to pinpoint. If they aren't fixed through data cleansing processes, bad data leads to analysis errors that can undermine the value of business analytics initiatives

Variability Variation in the data leads to wide variation in quality. Additional resources may be needed to identify, process, or filter low quality data to make it more useful.

Value Not all the data that's collected has real business value or benefits. As a result, organizations need to confirm that data relates to relevant business issues before it's used in big data analytics projects. Sometimes, the systems and processes in place are complex enough that using the data and extracting actual value can become difficult.